MCP Security 101: Exploring AI's Universal Connector

TLDR

The Model Context Protocol (MCP) is an open-source standard that connects AI assistants to various data sources and tools. This article covers:

- What MCP is and how it works as a universal connector for AI systems

- Key functionalities and use cases for enterprise, development, and productivity

- Security considerations and potential risks when implementing MCP

- Basic code examples to build intuition

- Best practices for secure MCP implementation and deployment

Model Context Protocol (MCP)

Imagine this: you’re working on your latest project, and instead of frantically searching through documentation, your AI assistant fetches exactly the right details from your Git repo or even your Slack conversations.

No fuss, no scrolling - just accurate, up-to-date answers.

Sounds pretty good, right? Well, that’s the vision behind Anthropic’s open standard called the Model Context Protocol (MCP).

So, what exactly is MCP?

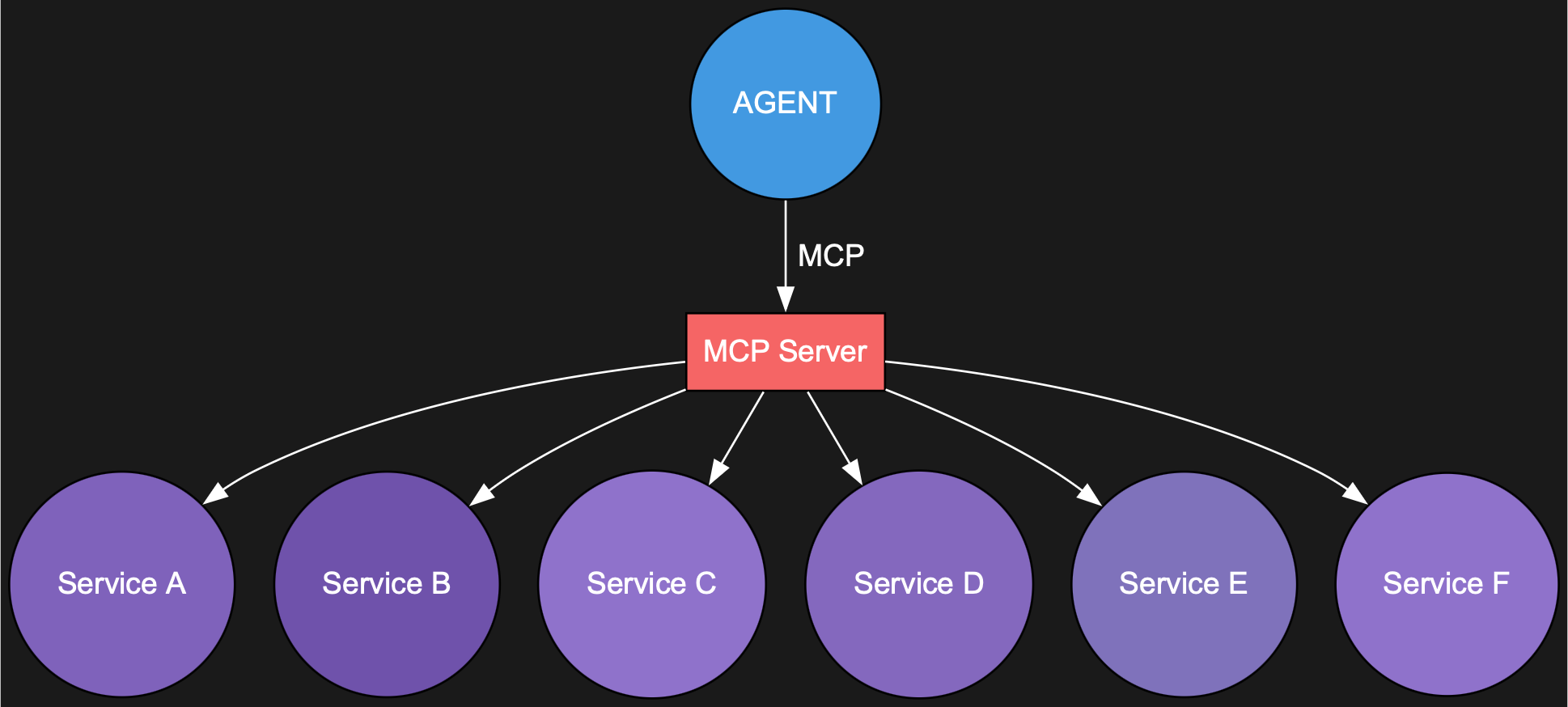

Think of MCP as the “USB-C” of the AI world - a universal connector linking AI assistants with virtually any data source or tool you can imagine, from files and databases to Slack and GitHub.

MCP aims to standardise the way AI models interact with different tools and services, letting developers plug and play without getting bogged down in technical spaghetti. The idea is to put an end to painstakingly writing custom code to make each AI model talk to each tool.

Ultimately, the goal is to improve AI capabilities and relevance by bridging AI to real-world data while reducing the integration burden on developers. MCP was created to solve the “N×M integration problem” in AI applications – the challenge of connecting N AI systems to M different data sources with custom code for each pairing.
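To make the arithmetic concrete, here is a tiny sketch. The counts (4 assistants, 6 data sources) are made-up numbers for illustration, and the N+M figure assumes each assistant needs one MCP client and each source needs one MCP server.

```python
def bespoke_integrations(n_assistants: int, m_sources: int) -> int:
    """One custom connector per (assistant, source) pairing."""
    return n_assistants * m_sources


def mcp_integrations(n_assistants: int, m_sources: int) -> int:
    """Assumes one MCP client per assistant plus one MCP server per source."""
    return n_assistants + m_sources


# Illustrative numbers only, not taken from any real deployment.
n, m = 4, 6
print(bespoke_integrations(n, m))  # 24
print(mcp_integrations(n, m))      # 10
```

The gap widens quickly: every new tool adds one server rather than one connector per assistant.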

Core Functionalities and Use Cases

At its core, MCP enables two-way communication between AI-powered applications and external resources. This means an AI assistant can not only read data from a source (for context) but potentially write or act on that source through tools, under controlled conditions.

Key use cases include:

Retrieving Contextual Data

An AI agent can fetch information from enterprise content repositories, wikis, or documents to answer queries with up-to-date information. This capability dramatically improves the relevance and accuracy of AI responses by grounding them in your organisation’s actual data.

- An AI coding assistant pulling a project’s documentation to understand technical requirements

- Retrieving recent Slack messages to capture team decisions that aren’t documented elsewhere

Coding and Development Aid

Developers can integrate codebase tools so AI can seamlessly interact with their development environment. This integration allows AI assistants to understand code in context rather than in isolation, leading to more relevant suggestions.

- Reading specific files from a Git repo to understand code structure

- Examining database schemas to generate appropriate queries

Business and Productivity Tools

MCP servers create bridges between AI assistants and common workplace tools, integrating them with the applications where work happens. This allows AI to become a true productivity partner by accessing the same information sources you use daily.

- Searching Google Drive files to find relevant documents based on content, not just titles

- Creating GitHub issues or pull requests directly from conversation context

Web and API Interaction

Some MCP servers function as controlled gateways to internet services, allowing AI assistants to retrieve current information while maintaining appropriate security boundaries. This extends an AI’s knowledge beyond its training data.

- Performing web searches for current information on rapidly evolving topics

- Checking documentation for libraries or frameworks that may have been updated

All of these functionalities run over MCP, which means the AI model issues structured requests instead of making tool-specific calls. This standardised approach aims to simplify development (one protocol, many tools) and enhance the context-awareness of AI models in various environments.
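To show roughly what a "structured request" looks like on the wire: MCP messages follow JSON-RPC 2.0, and a tool invocation uses the `tools/call` method. The sketch below only builds such a message. The tool name `search_docs` and its `query` argument are hypothetical and stand in for whatever a real server advertises.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialise a JSON-RPC 2.0 request asking a server to run one tool."""
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# "search_docs" and "query" are hypothetical names, used for illustration.
message = build_tool_call(1, "search_docs", {"query": "release checklist"})
print(message)
```

In practice an SDK builds and sends these messages for you, so you rarely write them by hand.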

Sounds awesome - where’s the catch?

MCP comes with “handle-with-care” labels, especially around security. Since it lets AI systems access external data, there’s always a risk things could get messy.

Imagine your friendly assistant accidentally divulging sensitive information because an MCP server tricked it into oversharing data.

MCP uses a client-server architecture, and the specification lists security and trust considerations that all implementors must carefully address, including the following.

User Consent and Control

- Users must explicitly consent to and understand all data access and operations

- Users must retain control over what data is shared and what actions are taken

- Implementors should provide clear UIs for reviewing and authorising activities

Data Privacy

- Hosts must obtain explicit user consent before exposing user data to servers

- Hosts must not transmit resource data elsewhere without user consent

The specification expands on these with further considerations around Tool Safety and LLM Sampling Controls.
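The consent requirements above can be sketched as a small gate that sits in the host application. This is a minimal illustration, not part of any MCP SDK: `ConsentGate`, `ask_user`, and `read_wiki_page` are hypothetical names, and a real host would show a proper approval dialog instead of a lambda.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ConsentGate:
    """Hypothetical host-side gate: no tool runs without explicit approval."""

    ask_user: Callable[[str], bool]  # returns True only if the user approves
    audit_log: list = field(default_factory=list)

    def call_tool(self, name: str, tool: Callable[..., str], arguments: dict) -> str:
        approved = self.ask_user(f"Allow tool '{name}' with arguments {arguments}?")
        # Record every decision, approved or not, so it can be reviewed later.
        self.audit_log.append((name, arguments, approved))
        if not approved:
            raise PermissionError(f"User declined tool call: {name}")
        return tool(**arguments)


def read_wiki_page(title: str) -> str:
    """Stand-in tool; a real one would live behind an MCP server."""
    return f"Contents of {title}"


# A real host would show a dialog. This stub approves everything.
gate = ConsentGate(ask_user=lambda prompt: True)
print(gate.call_tool("read_wiki_page", read_wiki_page, {"title": "Onboarding"}))
```

The point of the design is that the decision lives in the host, outside the model and outside the server, so neither can skip it.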

So is MCP secure?

Not on its own: MCP itself cannot enforce security principles at the protocol level.

The implementation guidelines call for implementors to follow security best practices in their integrations, including:

- Consent and authorisation

- Access controls and data protections

- Privacy considerations in feature designs
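As one small example of a data protection, a server could mask anything that looks like a credential before a tool result goes back to the model. This is only a sketch: the `redact` helper and the regular expression are assumptions made for illustration, and a real deployment would need patterns tuned to its own secrets.

```python
import re

# Hypothetical pattern; real deployments would tune this to their own secrets.
SECRET_PATTERNS = [
    re.compile(r"(?i)(api[_-]?key|token|password)\s*[=:]\s*\S+"),
]


def redact(text: str) -> str:
    """Mask anything that looks like a credential before the model sees it."""
    for pattern in SECRET_PATTERNS:
        text = pattern.sub(lambda m: f"{m.group(1)}=[REDACTED]", text)
    return text


print(redact("db host=10.0.0.5 password=hunter2 region=eu"))
# db host=10.0.0.5 password=[REDACTED] region=eu
```

Pattern matching like this catches only the obvious cases, so treat it as one layer among several.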

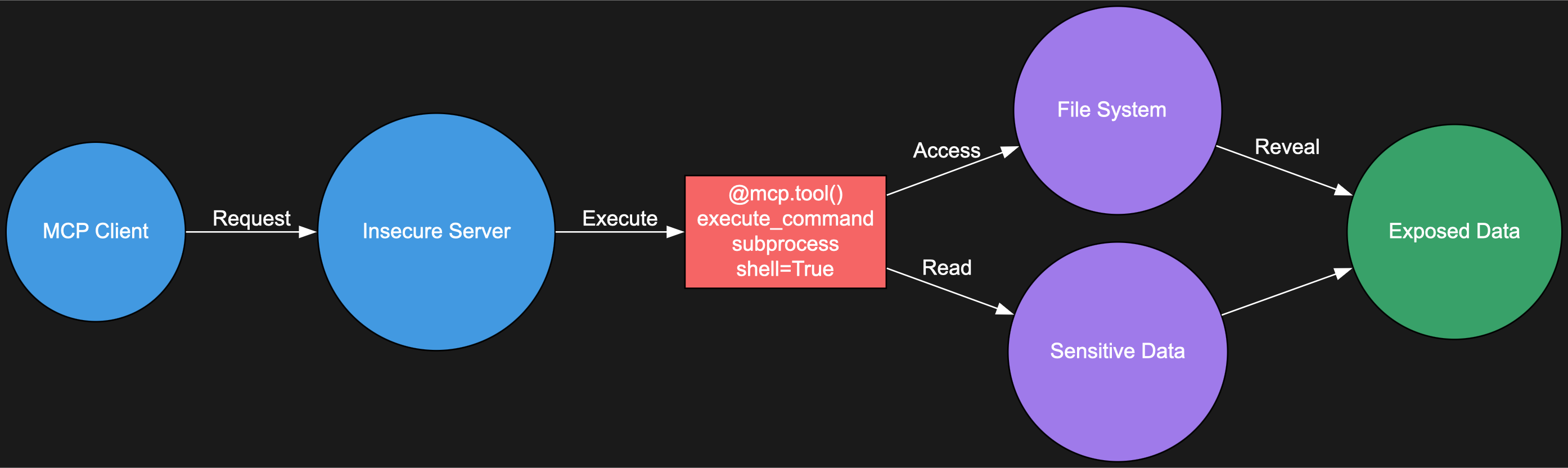

A Simple Example Demonstrating Security Risks

To build intuition around potential security concerns, let's examine an oversimplified and dangerous (don't use it!) MCP implementation built with the MCP Python SDK.

The diagram above illustrates how a misconfigured tool implementation could expose sensitive system functionality without proper safeguards.

Below is the Python code for this scenario. This server exposes unrestricted command execution to any connected client:

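    # simple_server.py
    import subprocess

    from mcp.server.fastmcp import FastMCP

    mcp = FastMCP("InsecureServer")


    @mcp.tool()
    def execute_command(command: str) -> str:
        """Executes arbitrary system commands."""
        # This is intentionally insecure! shell=True hands the raw string to the shell.
        return subprocess.check_output(command, shell=True).decode()


    if __name__ == "__main__":
        # Serve over stdio so a local client can launch and talk to this script.
        mcp.run()

This server exposes a dangerous tool that can execute any command on the system.

A client could connect and use it like this:

    # simple_client.py
    import asyncio

    from mcp import ClientSession, StdioServerParameters
    from mcp.client.stdio import stdio_client

    # Launch the server as a subprocess and talk to it over stdio
    server = StdioServerParameters(command="python", args=["simple_server.py"])


    async def main() -> None:
        async with stdio_client(server) as (read, write):
            async with ClientSession(read, write) as session:
                await session.initialize()
                # Execute arbitrary commands
                result1 = await session.call_tool("execute_command", {"command": "ls -la ~"})
                print("Command output:\n", result1.content[0].text)
                result2 = await session.call_tool("execute_command", {"command": "cat simple_server.py"})
                print("\nFile content:\n", result2.content[0].text)


    asyncio.run(main())

The client could list files in the home directory and even read the server's own source code, or do much worse.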

    Command output:
    drwx------ 12 user staff 384 Mar 7 16:21 .ssh
    -rw-r--r-- 1 user staff 2345 Mar 8 14:10 passwords.txt
    -rw------- 1 user staff 8949 Mar 8 13:45 .bash_history

This (toy) implementation directly violates the MCP specification’s security guidelines, which state that tools should be designed with security in mind and avoid executing arbitrary code without proper validation and sandboxing.
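For contrast, here is a hedged sketch of what basic validation could look like for a command-running tool. It is plain Python with no MCP dependency. The function name `run_allowed_command` and the contents of `ALLOWED_PROGRAMS` are assumptions chosen for illustration. Note that an allowlist is not a sandbox: `ls` can still list any directory the process can read, so real deployments would also validate arguments and isolate the process.

```python
import shlex
import subprocess

# Hypothetical allowlist: only these read-only programs may run.
ALLOWED_PROGRAMS = {"ls", "date", "whoami"}


def run_allowed_command(command: str) -> str:
    """Run a command only if its program is allowlisted, never through a shell."""
    argv = shlex.split(command)
    if not argv or argv[0] not in ALLOWED_PROGRAMS:
        raise PermissionError(f"Command not permitted: {command!r}")
    # No shell is involved, so ';', '|' and '$(...)' are not interpreted.
    completed = subprocess.run(
        argv, capture_output=True, text=True, timeout=5, check=True
    )
    return completed.stdout


# The request that leaked secrets earlier is now refused before anything runs.
try:
    run_allowed_command("cat ~/.ssh/id_rsa")
except PermissionError as exc:
    print(exc)
```

Passing a list of arguments instead of a shell string is the key change, since it removes the shell's ability to chain or substitute commands.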

Managing security effectively

While MCP expands the ways AI can interact with data, it doesn’t have to mean a security nightmare. Following well-established security practices, including threat modelling to identify potential attack vectors, can significantly reduce the risk.

Fortunately, organisations like OWASP have developed initiatives such as the Agentic Security Initiative (ASI) to help address the unique security challenges posed by AI agents.

Regularly auditing MCP servers, sandboxing sensitive tasks, and keeping permissions tight make it possible to use MCP with the appropriate guardrails.

Ultimately, it’s another technology layer that needs to be secured based on the sensitivity of the data it accesses and the potential impact of misuse.
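As one illustration of keeping permissions tight, a file-reading tool can be confined to a single directory. The sketch below is an assumption about how that might look, not code from any MCP server: `resolve_inside` is a hypothetical helper, and it relies on `Path.is_relative_to`, which needs Python 3.9 or later.

```python
import tempfile
from pathlib import Path


def resolve_inside(root: Path, requested: str) -> Path:
    """Return the resolved path, or raise if it escapes the permitted root."""
    root = root.resolve()
    # resolve() collapses '..' and follows symlinks before the check.
    candidate = (root / requested).resolve()
    if not candidate.is_relative_to(root):
        raise PermissionError(f"Path escapes permitted root: {requested}")
    return candidate


# Demo in a throwaway directory so nothing real is touched.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "notes.txt").write_text("hello")
    print(resolve_inside(root, "notes.txt").read_text())  # hello
    try:
        resolve_inside(root, "../../etc/passwd")
    except PermissionError as exc:
        print(exc)
```

Resolving the path before checking it matters, because a naive string prefix check would miss `..` segments and symlinks.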

What’s next for MCP?

MCP is still new, and there’s a lot of room for growth, particularly around secure remote connections. But already, tech-savvy companies are integrating MCP, seeing its potential for streamlining workflows.

So, should you dive into MCP? Absolutely - just do so thoughtfully. Think of MCP like a shiny new Swiss Army knife for AI: incredibly versatile and powerful, but best handled with care to avoid mishaps.