Actionable Defences Against AI-Powered Phishing

TLDR

- Modern phishing combines LLM-generated text, visual deepfakes, audio deepfakes, and conversational AI

- AI tools are making phishing attacks more sophisticated and harder to detect

- Key defences include contextual awareness, out-of-band verification channels, and healthy scepticism

- Threat modeling provides a structured way to anticipate and counter AI-enhanced phishing


Phishing scams have been around for years and have long served as a primary vector for system compromises and data breaches. But things are changing fast. New AI tools are making these attacks harder to spot and more effective than ever before. This technological shift demands not only awareness but adaptive defence strategies to counter increasingly sophisticated social engineering attempts.

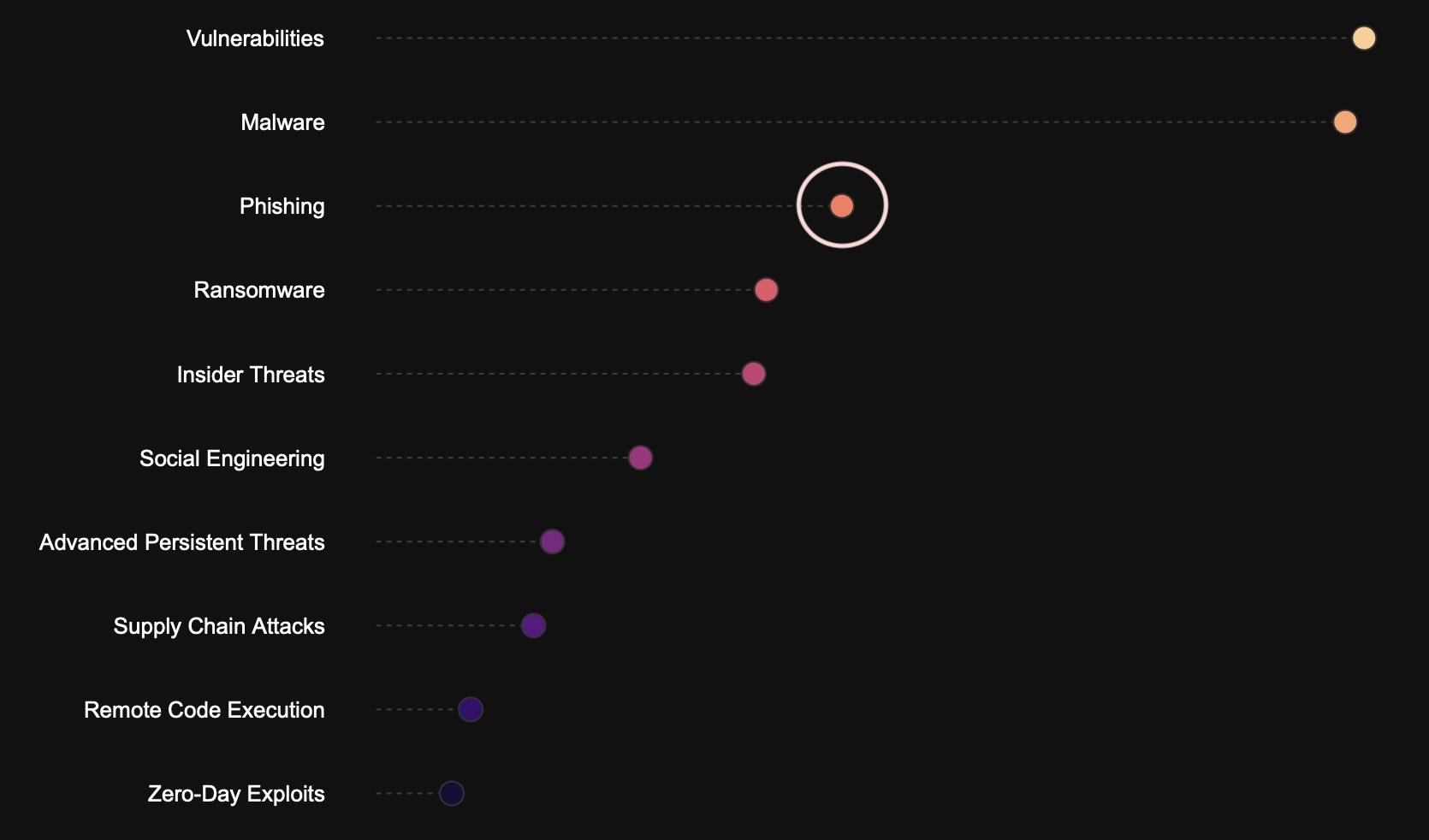

Fig. 1: Phishing ranks among the most common attack vectors and consistently appears as a major threat in our intelligence data.

The Changing Face of Digital Deception

Traditional phishing attacks have long relied on volume and chance: cast a wide net and you will inevitably catch a few unwary victims. Yet what we’re witnessing today represents a fundamental shift. As someone who has worked in cybersecurity for many years, I’ve observed how these attacks have evolved from crude, easily identifiable schemes to sophisticated operations that challenge even the most security-conscious individuals.

The Dual Paths of Phishing

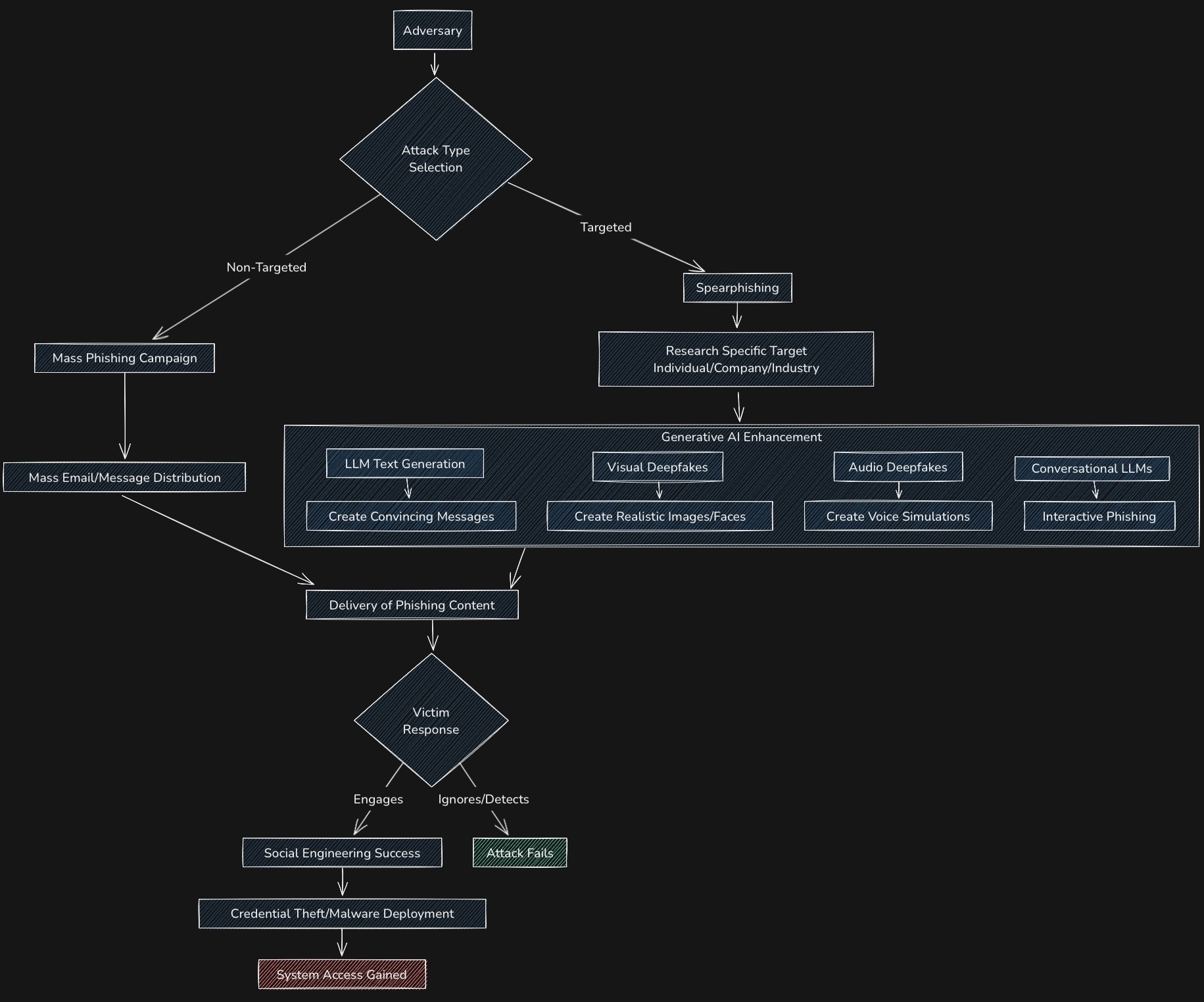

At its core, phishing continues to follow two primary paths: mass phishing campaigns casting wide nets, and targeted spearphishing operations focusing on specific individuals or organisations.

What’s changed is not the destination but how these attacks are crafted, delivered, and executed.

The mass phishing approach has traditionally been hampered by its own inconsistencies—grammatical errors, design flaws, and contextual mistakes that serve as red flags. Spearphishing, while more effective, has been limited by the time and research required to create convincing personalised content.

Generative AI is dismantling both of these limitations simultaneously.

The AI Enhancement Layer

The Generative AI Enhancement section of our flow chart represents a suite of technologies that elevate phishing from craft to industrial-scale deception:

LLM-Generated Text

The awkward phrasing and grammatical errors that once betrayed phishing attempts are disappearing. Large language models can now produce contextually appropriate, grammatically flawless communications that mirror legitimate correspondence.

They can imitate an organisation’s house style, reference recent events, produce technical notices that appear to come from the IT department, and write phishing in multiple languages without the linguistic slips that once served as warning signs.

The ability to generate high-quality deceptive text at scale fundamentally alters the economics of targeted phishing, allowing adversaries to personalise attacks without proportional increases in resource expenditure.

Visual Deepfakes

Our inherent trust in what we see is being exploited through AI-generated images that can place fabricated individuals in convincing scenarios or mimic trusted figures. These technologies can generate photorealistic yet non-existent individuals, manipulate existing images to place targets in false contexts, create synthetic versions of organisational badges and security elements, and produce convincing website interfaces mimicking legitimate login portals.

The visual dimension adds powerful persuasive elements to phishing attempts, bypassing our instinctive trust in visual evidence.

Audio Deepfakes

Voice cloning technology has advanced to the point where a brief sample of someone’s speech can be transformed into a synthetic voice capable of saying anything. This technology enables voice synthesis with minimal sample data, creation of emotional inflections matching the impersonated target, generation of realistic background audio, and even real-time voice modification for live vishing (voice phishing) calls.

The implications are particularly profound, as voice authentication has traditionally served as a trust anchor in many security protocols.

Conversational AI

Perhaps most concerning is the emergence of interactive phishing, where AI systems engage in real-time conversation, adapting to queries and concerns in ways that build trust and overcome hesitation.

Beyond static content, LLMs enable dynamic responses that adapt to a target’s questions, persistent engagement that builds false trust over time, technical support impersonation backed by domain-specific knowledge, and systems prompted specifically to extract sensitive information.

This conversational capability transforms phishing from a one-shot attempt to a sustained engagement, increasing the probability of eventual success.

How to Protect Yourself and Your Organisation

- Develop contextual awareness: Instead of focusing solely on the message itself, consider its timing, purpose, and whether it aligns with expected communications. Be particularly vigilant during unusual communication patterns such as “spam bombing”, where adversaries flood your inbox with thousands of messages, often as a smokescreen for targeted phishing attempts hidden among the noise.

- Establish verification channels: Create out-of-band verification methods for sensitive requests or information. A quick phone call through an authenticated channel can prevent a significant compromise. Traditional authentication mechanisms increasingly need to be supplemented with continuous behavioural analysis and multi-factor approaches that resist social engineering.

- Cultivate healthy scepticism: Trust less, verify more. Double-check unusual requests through different channels. The most effective defence remains a questioning mindset. Security awareness programmes must evolve beyond teaching users to spot grammatical errors and toward more sophisticated contextual awareness.

- Invest in continuous learning: The threat landscape evolves daily. Commit to understanding emerging threats and defence mechanisms as part of your personal and professional development.
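To make the spam-bombing signal more concrete, here is a minimal sketch of a sliding-window burst detector over inbound message timestamps. It assumes you can export arrival times from your mail gateway; the function name `detect_bursts` and the default 10-minute window and 100-message threshold are illustrative assumptions, not tuned values.

```python
from collections import deque
from datetime import datetime, timedelta


def detect_bursts(timestamps, window=timedelta(minutes=10), threshold=100):
    """Return the times at which inbound volume first reaches `threshold`
    messages within a sliding `window`. One alert is raised per burst.

    Hypothetical detector: window and threshold are illustrative defaults.
    """
    alerts = []
    recent = deque()   # timestamps inside the current window
    in_burst = False
    for ts in sorted(timestamps):
        recent.append(ts)
        # Evict messages older than the window, relative to the newest one.
        while ts - recent[0] > window:
            recent.popleft()
        if len(recent) >= threshold:
            if not in_burst:
                alerts.append(ts)  # burst begins: raise a single alert
                in_burst = True
        else:
            in_burst = False       # volume dropped: re-arm the detector
    return alerts


if __name__ == "__main__":
    base = datetime(2024, 1, 1, 8, 0)
    normal = [base + timedelta(hours=h) for h in range(5)]
    flood = [datetime(2024, 1, 1, 13, 0) + timedelta(seconds=s) for s in range(150)]
    print(detect_bursts(normal + flood))
```

An alert is a cue to look harder at the handful of messages that arrive during or just after the flood, since that is where a targeted lure may be hidden.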

While the challenges are substantial, organisations can develop meaningful resilience through implementing zero-trust architectures, developing organisation-specific verification mechanisms, conducting regular red-team exercises employing generative AI, and creating cross-functional response teams with both technical expertise and behavioural insight.

Perhaps most importantly, organisations must foster institutional scepticism—a culture where verification is normalised rather than exceptional, and questioning unexpected requests is rewarded rather than discouraged.
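One way to make out-of-band verification routine rather than exceptional is to encode it as a rule: sensitive requests are confirmed through contact details held in an independent directory, never through details supplied in the request itself. The sketch below assumes a hypothetical `TRUSTED_DIRECTORY` and a hypothetical set of `SENSITIVE_ACTIONS`; both names and the placeholder number are illustrative only.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical directory maintained independently of any inbound message.
TRUSTED_DIRECTORY = {"cfo@example.com": "+1-555-0100"}

# Hypothetical set of actions that always require out-of-band confirmation.
SENSITIVE_ACTIONS = {"payment_change", "wire_transfer", "credential_reset"}


@dataclass
class Request:
    sender: str
    action: str
    contact_in_message: Optional[str] = None  # supplied by the requester: never used


def callback_number(req: Request) -> Optional[str]:
    """Return the number to call to verify `req`, or None if no check is needed.

    Urgency is intentionally not an input, so an "urgent" request cannot
    bypass verification.
    """
    if req.action not in SENSITIVE_ACTIONS:
        return None
    number = TRUSTED_DIRECTORY.get(req.sender)
    if number is None:
        # Unknown sender asking for a sensitive action: do not proceed.
        raise LookupError(f"no trusted contact for {req.sender}; escalate to security")
    return number


if __name__ == "__main__":
    req = Request("cfo@example.com", "wire_transfer", contact_in_message="+1-555-0199")
    print(callback_number(req))  # the directory number, not the one in the message
```

Keying the lookup on the claimed sender stays safe even if that address is spoofed, because the callback reaches the genuine person at a number the attacker does not control.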


Applying Threat Modeling: Plan Before They Attack

As AI-enhanced phishing continues to evolve, organisations need structured approaches to anticipate and mitigate emerging threats. Threat modeling offers a systematic framework that can significantly strengthen defences against these sophisticated attacks.

What is Threat Modeling?

Threat modeling is a structured approach to identifying potential security threats, vulnerabilities, and attack vectors in your systems and applications. It helps you understand your security risks before they can be exploited, enabling you to implement effective countermeasures.

Applying Threat Modeling to AI-Enhanced Phishing

When applied specifically to AI-enhanced phishing, threat modeling provides several key advantages:

- Identifying Valuable Targets

By mapping organisational assets and their value, security teams can predict which individuals or departments are most likely to face sophisticated phishing attempts. Executives, finance personnel, and those with access to intellectual property often warrant enhanced protection and awareness training.

- Anticipating Attack Vectors

Instead of waiting for novel AI-powered techniques to emerge, threat modeling encourages security teams to anticipate how generative AI might be weaponised against specific organisational workflows and communications.

- Uncovering Procedural Vulnerabilities

Beyond technical weaknesses, this approach reveals process vulnerabilities that attackers might exploit, such as predictable approval chains, standard communication templates that could be mimicked, or scenarios where urgent requests bypass normal verification.

- Developing Targeted Countermeasures

Rather than implementing generic anti-phishing tools, threat modeling enables the development of tailored defences aligned with your organisation’s specific risk profile and communication patterns.
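These advantages can be combined into a simple prioritisation exercise. The sketch below is a hypothetical scoring model, not a standard methodology: it multiplies a role’s asset value by its public exposure (the raw material for voice and video deepfakes) and adds a fixed penalty for each procedural gap, such as urgent requests that skip verification. The 1-5 scales, the `GAP_PENALTY` of 3 and the example roles are arbitrary assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Role:
    name: str
    asset_value: int       # 1-5: value of what the role can access or approve
    public_exposure: int   # 1-5: public voice, video and text usable for impersonation
    gaps: list = field(default_factory=list)  # procedural weaknesses attackers could exploit


GAP_PENALTY = 3  # hypothetical weight per procedural gap


def risk_score(role: Role) -> int:
    """Hypothetical score: value x exposure, plus a penalty per procedural gap."""
    return role.asset_value * role.public_exposure + GAP_PENALTY * len(role.gaps)


def prioritise(roles):
    """Order roles from highest to lowest estimated phishing risk."""
    return sorted(roles, key=risk_score, reverse=True)


roles = [
    Role("Developer", asset_value=3, public_exposure=2),
    Role("CEO", asset_value=5, public_exposure=5),
    Role("Finance clerk", asset_value=4, public_exposure=2,
         gaps=["urgent payments skip callback"]),
]

if __name__ == "__main__":
    for r in prioritise(roles):
        print(f"{r.name}: {risk_score(r)}")
```

The numbers matter less than the conversation they force: in this example the finance clerk outranks the developer only because of a process gap, which is the kind of finding that points to a targeted countermeasure rather than a generic tool.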

Implementing Effective Threat Modeling

To leverage threat modeling against AI-enhanced phishing, hold regular modeling sessions with development teams during sprint planning and design phases. These sessions help identify potential vulnerabilities before they become embedded in production systems. This proactive stance not only reduces remediation costs but also strengthens the collaborative relationship between security and development stakeholders. By making threat modeling a key focus of your security strategy, you not only prepare for current AI-enhanced phishing techniques but also develop an adaptive framework that can evolve alongside emerging threats.

Looking Ahead

There is no silver bullet against the AI-powered phishing threats described in this article, but threat modeling offers a valuable approach. By systematically examining assets, potential attackers, and possible attack vectors, organisations can better understand their specific vulnerabilities to phishing attacks, including those enhanced by AI. This understanding often helps teams prioritise defences where they’re most needed.

Combining threat modeling with regular red-team exercises that simulate AI-powered attacks can provide valuable insights. These simulations often reveal unexpected vulnerabilities and help security teams refine their detection and response capabilities.
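When running such simulations, it helps to track not only who clicked but also who reported the message, broken down by team. A minimal sketch, assuming each simulated message yields a hypothetical `(department, clicked, reported)` record; the record shape, the function name `summarise` and the sample data are illustrative assumptions.

```python
from collections import defaultdict


def summarise(records):
    """Aggregate simulated-phishing records into per-department rates.

    `records` is an iterable of (department, clicked, reported) tuples.
    Returns {department: (click_rate, report_rate)}.
    """
    counts = defaultdict(lambda: [0, 0, 0])  # [sent, clicked, reported]
    for dept, clicked, reported in records:
        c = counts[dept]
        c[0] += 1
        c[1] += int(clicked)
        c[2] += int(reported)
    return {dept: (clicked / sent, reported / sent)
            for dept, (sent, clicked, reported) in counts.items()}


records = [
    ("Finance", True, False),
    ("Finance", False, True),
    ("Finance", False, True),
    ("Finance", False, False),
    ("IT", False, True),
    ("IT", False, True),
]

if __name__ == "__main__":
    for dept, (click_rate, report_rate) in summarise(records).items():
        print(f"{dept}: clicked {click_rate:.0%}, reported {report_rate:.0%}")
```

A rising report rate is arguably as useful a signal as a falling click rate, because it reflects the culture of institutional scepticism described earlier.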

As attackers increasingly combine text, image, audio, and interactive AI capabilities, these proactive approaches may enable security teams to adapt their defences more effectively. The goal isn’t perfect security, but rather a shift toward preparation that can help organisations build resilience in the face of evolving threats.